This post is aimed at Cubase MIDI Remote users who have experienced the issue. It also requires a reasonably good understanding of MIDI and the Cubase MIDI Remote.

The Problem

The Cubase MIDI Remote, first introduced in Cubase 12, is a fantastic addition for remote control enthusiasts like me. The prior Generic Remote (still working, but now declared “legacy”) was useful, but it was more limited and required some extra mental gymnastics to understand and use.

However, as of this writing (using Cubase 13.0.41) there’s still a killer flaw when using motorized faders (or endless encoders bi-directionally in “absolute mode”).

Motorized Fader Symptoms

When using the MIDI Remote bi-directionally with motorized faders, it feels like the faders are “pushing back” in the opposite direction from the user-initiated movement. That is, if you push up, the motorized faders appear to be pushing back down, and if you pull down, they appear to be pulling back up.

Endless Encoder Symptoms

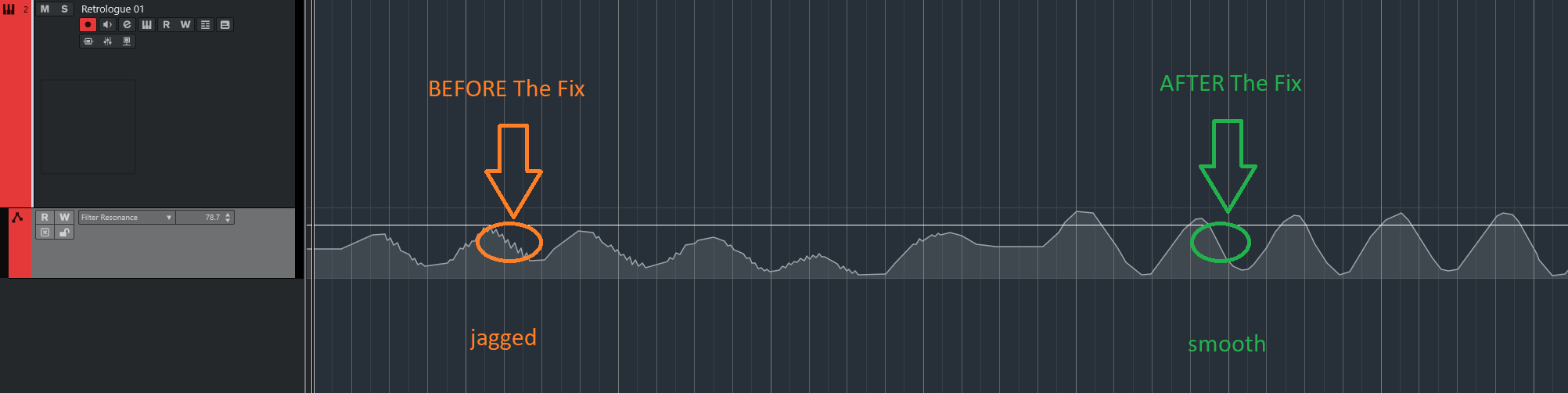

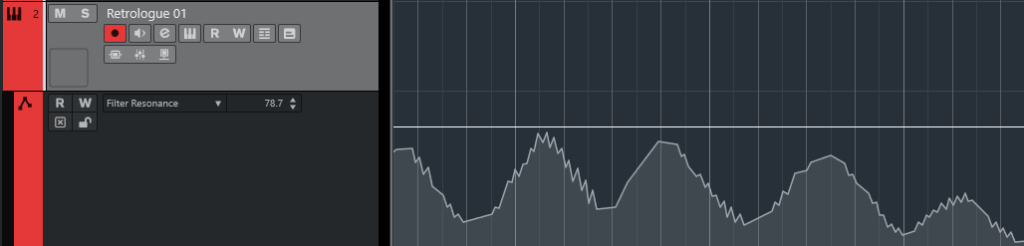

When using an endless encoder in “absolute” mode, the target control seems jittery, and if VST automation is being recorded, the line looks jagged.

What’s going on?

In addition to receiving MIDI messages from external hardware in order to control software parameters in VST plugins and Cubase itself, the Cubase MIDI Remote also sends MIDI messages to the configured controller hardware (if allowed to do so).

Why sending current values is important, if you have hardware that can use it:

- We need this for motorized faders, so the faders move to the correct position when the software parameter changes.

- We want this for endless encoders that display incoming values, and also to allow the endless encoder to smoothly pick up from the current parameter value in the controlled software.

This is useful and looks impressive when you change presets or tracks in the software, because it allows the hardware to immediately mirror the software settings for the connected parameters.

And then moving a fader or endless encoder smoothly picks up exactly where the software currently is – without any unpredictable or unpleasant jumps. It’s like having a great hardware synth or FX unit under your fingertips.

Conceptual Sequence of Events

Here’s how I conceptualize what’s happening behind the scenes. There are two independent processes happening all the time:

Hardware sending messages to software

- Hardware control is being moved

- Hardware sends MIDI message

- MIDI Remote receives MIDI message and sends a VST automation message to the connected VST or to Cubase itself (e.g. channel volume or pan, etc).

- The VST plugin sets the new value for that parameter.

Software sending messages to hardware

- A VST plugin (or a Cubase channel) parameter is being changed by one of several events, including:

  - A new preset is being loaded

  - The user moves a control in the GUI

  - The VST has some internal movement (like an LFO) triggering changes

  - The VST has received a direct MIDI message (rather than a VST automation event)

  - Cubase sends a VST automation event from a VST automation track

  - The MIDI Remote sends a VST automation event because a hardware control moved.

- The VST plugin informs Cubase that it has a new value for that parameter.

- The MIDI Remote is watching for all of those “parameter has changed” messages from VSTs and Cubase itself, and promptly sends the associated MIDI message out to each of the configured hardware controllers. Yes, there can be multiples, and that’s part of the complication.

While I don’t know the internal programming of Cubase, I suspect that the MIDI Remote is using independent worker processes for the sending and receiving parts of the work – and that makes all the sense in the world. It’s kind of analogous to two or more different people or departments in a company being responsible for incoming and outgoing mail. Organizing things that way generally makes things faster and easier to manage.

The echo latency problem

So I’m assuming that the “outbound” worker has no idea what triggered the message. It will therefore send the message to all connected hardware devices, including the one that originally triggered the parameter change, but there’s a delay between the incoming and outbound messages (also called “latency”). And when that latency is larger than the gap between incoming messages, we have a problem.

I’ll try to illustrate by giving a time stamp and a message sequence (A,B,C, …) for each event in an example: We’re starting at exactly zero milliseconds after 12 noon, moving a motorized fader starting from position 75 and pushing higher:

- 12:00:00:000: Hardware control is moved, sending a MIDI CC value 76 (message A)

- 12:00:00:050: MIDI Remote (inbound message worker) translates it to VST automation value and sends it to the VST plugin

- 12:00:00:100: Hardware control keeps moving, sending a MIDI CC value 77 (message B)

- 12:00:00:125: VST has finished its internal work and lets Cubase know about the changed VST automation value (message A)

- 12:00:00:150: MIDI Remote (outbound message worker) translates the VST automation value to a MIDI message and sends it (message A, CC value 76) to each connected hardware control, including the originating hardware (which is effectively an echo to that hardware)

- The original hardware sender now has to change its internal value from the 77 (message B) it has already moved to and sent out, back to the older 76 (message A). And that’s why it’s pushing back!

So the hardware control gets echoes of outdated values back while it’s moving and therefore pushes back and/or sets its internal value to the older value, and then keeps moving from there. It’s a kind of “two steps forward, one step back” motion that looks jagged when you record it in Cubase VST automation lanes.
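
To make the timing argument concrete, here is a minimal Python sketch that replays the example timeline. It is only an illustration: the 100 ms gap between fader messages and the 150 ms round-trip latency are the made-up numbers from the example, and the names `Event`, `simulate` and `stale_echoes` are hypothetical, not part of Cubase or any MIDI library.

```python
from dataclasses import dataclass


@dataclass
class Event:
    time_ms: int
    direction: str  # "hw->sw" (fader sends) or "sw->hw" (echo comes back)
    value: int


def simulate(start_value, moves, move_interval_ms, echo_latency_ms):
    """Return the time-ordered fader sends together with their delayed echoes."""
    events = []
    for i in range(moves):
        sent_at = i * move_interval_ms
        value = start_value + 1 + i
        events.append(Event(sent_at, "hw->sw", value))
        # Assumption: every value is echoed back after a fixed round-trip latency.
        events.append(Event(sent_at + echo_latency_ms, "sw->hw", value))
    # At equal times the send sorts before the echo ("hw->sw" < "sw->hw").
    return sorted(events, key=lambda e: (e.time_ms, e.direction))


def stale_echoes(events):
    """Echoes whose value is older than what the hardware has already sent."""
    latest_sent = None
    stale = []
    for e in events:
        if e.direction == "hw->sw":
            latest_sent = e.value
        elif latest_sent is not None and e.value != latest_sent:
            stale.append(e)
    return stale


timeline = simulate(start_value=75, moves=3, move_interval_ms=100, echo_latency_ms=150)
for e in timeline:
    print(f"{e.time_ms:4d} ms  {e.direction}  CC value {e.value}")
print("stale echoes:", [(e.time_ms, e.value) for e in stale_echoes(timeline)])
```

With these numbers, the echoes arriving at 150 ms and 250 ms carry values (76 and 77) that the fader has already moved past. If the latency is lowered to 50 ms, below the 100 ms gap between moves, no echo is stale.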

Avoiding the echo latency problem

To avoid this problem we could:

- move the controls so slowly that the echo happens faster than a value change at the sending device. That’s pretty obviously stupid and not an actual solution.

- or ask the programmers of the hardware’s firmware to ignore echoed incoming messages. That sounds reasonable, yet might be tricky, depending on the computing power and capability of the hardware’s microprocessors.

- or ask the programmers of Cubase not to echo incoming messages back to the sending hardware. That sounds possible and is arguably the best place to do it. (Side note: Interestingly, the legacy Generic Remote in Cubase does not echo to the sending device, so it does not have this problem.)

One possible excuse for not fixing the problem is that it’s not possible to figure out what is technically an echo and what is a genuine message caused by something else that happened (e.g. a patch change). This would also be a problem in the physical world analogy, if one decouples the sending and receiving mail rooms.

But there’s a practical solution that cleans up the problem without being “mathematically/logically” correct:

Ignore messages to a hardware control while it’s sending input

or, more accurately:

Ignore messages to a hardware control that has sent a message a very short time ago

I think it would be entirely possible to implement this solution either in the Cubase MIDI Remote code or in the firmware of the relevant hardware controllers.

Since neither has happened, I’ve created my own:

Workaround solution to the MIDI Remote Motorized Fader Problem

My workaround works by putting a MIDI processing script between Cubase and all of my hardware controllers that have motorized faders, touch strips, or bi-directional endless encoders working in absolute mode. The script aims to:

Intercept all remote control traffic (both ways) and mute corresponding messages to hardware controls that have sent a message a very short time ago.

Conceptually my script does the following:

Intercept all MIDI messages between the hardware controller and the Cubase MIDI Remote, and for each message:

- If this MIDI message is from a hardware control:

  - STEP 1: remember what kind of message it is and assign it a time stamp (when the temporary mute is over); i.e. make or update an entry in a map of name/value pairs with

    - name (composite key): hardware device, MIDI message type, MIDI channel, MIDI message number, e.g. "M3 15 CC 11", which means the message came from Maschine Mk3 on MIDI Channel 16 and was a CC message for Controller 11.

    - value: mute ending time, i.e. a Unix time stamp in seconds with millisecond accuracy plus the desired mute time (e.g. adding 0.5 to the time stamp mutes incoming messages for half a second)

  - STEP 2: send the MIDI message to Cubase

- If this MIDI message is from the Cubase MIDI Remote:

  - STEP 1: check if we have a matching key in our list (device, channel, message type, message number)

  - STEP 2: if there is no matching key, or if the current time is greater than the mute end time for this kind of MIDI message

    - STEP 3: send this MIDI message (since it's not an echo)

  - if the current time is smaller than the mute end time for that kind of message

    - NO MORE STEPS: ignore this MIDI message (since it's probably an echo)

The above algorithm is an attempt to minimize the required mutes, so for example if a preset change occurs in the software while I’m moving a hardware fader, all of the preset values still get reflected on the other faders, except for the fader I’m currently moving.
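
The muting logic described above can be sketched in a few lines of Python. This is a conceptual illustration under stated assumptions, not the script I actually run: the class name `EchoMute`, its method names and the injectable `clock` parameter are hypothetical, and a real version would still need MIDI input and output around it.

```python
import time


class EchoMute:
    """Remembers, per control, until when messages back to that control are muted."""

    def __init__(self, mute_time=0.5, clock=time.monotonic):
        self.mute_time = mute_time  # seconds to mute after the last hardware message
        self.clock = clock          # injectable so the logic can be tested without waiting
        self.mute_until = {}        # composite key -> time at which the mute ends

    def from_hardware(self, device, msg_type, channel, number):
        """STEP 1 for hardware input: start or extend the mute window.
        The caller then forwards the message to the software (STEP 2)."""
        key = (device, msg_type, channel, number)
        self.mute_until[key] = self.clock() + self.mute_time

    def should_forward_to_hardware(self, device, msg_type, channel, number):
        """For software output: True if the message should reach the hardware."""
        mute_end = self.mute_until.get((device, msg_type, channel, number))
        # No entry means this control never sent anything, so nothing to mute.
        return mute_end is None or self.clock() > mute_end


# Usage with a fake clock so the example runs instantly.
now = [0.0]
mute = EchoMute(mute_time=0.5, clock=lambda: now[0])

mute.from_hardware("M3", "CC", 15, 11)   # fader for CC 11 is being moved at t = 0.0

now[0] = 0.15                            # an echo arrives 150 ms later
echo_passes = mute.should_forward_to_hardware("M3", "CC", 15, 11)
other_control_passes = mute.should_forward_to_hardware("M3", "CC", 15, 12)

now[0] = 0.8                             # well after the mute window has ended
later_message_passes = mute.should_forward_to_hardware("M3", "CC", 15, 11)

print(echo_passes, other_control_passes, later_message_passes)
```

Keying the map by device, message type, channel and number is what keeps the mute narrow: only the control that is currently being moved is shielded, while every other control still receives its updates.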

On my system, with simple testing, half a second of muting did the trick, but the shortest workable time will probably depend not only on the speed of one’s computer, but also on which VSTs are being used. So the mute time may need to be configured differently. Keeping the mute time short minimizes unintended consequences, like ignoring a message that wasn’t an echo.
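
The trade-off in choosing the mute time can be illustrated with a small Python sketch. All numbers here are invented for the illustration (an echo 150 ms after the last fader message, a preset change at 300 ms, automation playback at 900 ms), and the function name `delivered` is hypothetical.

```python
def delivered(mute_time, last_hardware_send, software_messages):
    """Return the labels of software messages that would reach the hardware control.

    software_messages is a list of (time_in_seconds, label) pairs addressed to the
    same control that last sent a message at last_hardware_send.
    """
    mute_end = last_hardware_send + mute_time
    return [label for t, label in software_messages if t > mute_end]


# Hypothetical traffic aimed at one fader after its last move at t = 0.0 s.
messages = [
    (0.15, "echo of the fader move"),
    (0.30, "preset change"),
    (0.90, "automation playback"),
]

for mute_time in (0.1, 0.2, 0.5):
    print(mute_time, delivered(mute_time, 0.0, messages))
```

In this made-up scenario, a mute time of 0.1 seconds is too short and lets the echo through, 0.2 seconds blocks only the echo, and 0.5 seconds also swallows the genuine preset change.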

A real world implementation of the solution

After looking at various options, I ended up using the very useful CoyoteMIDI Pro application as my scripting environment. It’s relatively affordable and I had used it some time ago for something else. I’ve also experienced outstanding support on their Discord forum. CoyoteMIDI has been available on Windows for a while now, and it’s currently in beta on macOS. On Windows, I used the most excellent loopMIDI by Tobias Erichsen to create virtual MIDI cables between Cubase and CoyoteMIDI.

Using CoyoteMIDI and loopMIDI will also allow me to do other future tricks with Cubase and my hardware controllers.

My current hardware controllers (all connected simultaneously):

- Native Instruments Komplete Kontrol Mk2 (has endless encoders and a touch strip)

- Native Instruments Maschine Mk3 (has endless encoders and a touch strip)

- Native Instruments Maschine Jam (has touch strips)

Each hardware controller is connected to CoyoteMIDI, and two loopMIDI virtual MIDI cables per hardware device connect CoyoteMIDI to Cubase, one for each direction.

I’ve named my loopMIDI virtual cables as follows:

- fKK from CoyoteMIDI to Cubase (carries messages from Komplete Kontrol)

- 2KK from Cubase to CoyoteMIDI (carries messages to Komplete Kontrol)

- fM3 from CoyoteMIDI to Cubase (carries messages from Maschine MK3)

- 2M3 from Cubase to CoyoteMIDI (carries messages to Maschine MK3)

- fMJ from CoyoteMIDI to Cubase (carries messages from Maschine Jam)

- 2MJ from Cubase to CoyoteMIDI (carries messages to Maschine Jam)
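
The cable naming above boils down to a small routing table. As a rough sketch of the idea in Python (the function name `build_routes` and the variable names are hypothetical; the device and cable names are the ones from my own setup and will differ on other systems):

```python
# Hardware device -> (cable to Cubase, cable back from Cubase)
SEND_RETURN = {
    "Maschine MK3 Ctrl MIDI": ("fM3", "2M3"),
    "Maschine Jam - 1": ("fMJ", "2MJ"),
    "KOMPLETE KONTROL - 1": ("fKK", "2KK"),
}


def build_routes(send_return):
    """Expand the per-device cable pairs into lookup tables.

    route maps every input port to the port its messages are forwarded to.
    muted_return_port maps each hardware device to the return cable whose
    traffic may be muted (input from the hardware itself is never muted).
    """
    route = {}
    muted_return_port = {}
    for hardware, (to_cubase, from_cubase) in send_return.items():
        route[hardware] = to_cubase        # send path: hardware -> software
        route[from_cubase] = hardware      # return path: software -> hardware
        muted_return_port[hardware] = from_cubase
    return route, muted_return_port


route, muted_return_port = build_routes(SEND_RETURN)
print(route["Maschine MK3 Ctrl MIDI"])        # cable carrying Maschine MK3 input to Cubase
print(route["2KK"])                           # hardware that receives Cubase's replies
print(muted_return_port["Maschine Jam - 1"])  # return cable that may be muted
```

Keeping one pair of cables per device means the return traffic for each controller can be identified, and muted, independently of the others.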

I’ve developed and tested my script on Windows 10, so I don’t know yet if it will work on a Mac. My script only receives and sends MIDI; it uses no file or network access and no keystrokes. If I’m not mistaken, making virtual MIDI cables is already included in macOS.

So here’s the actual script:

    // echoMute.cy muting echoed MIDI events that may cause interference or MIDI storms

    event scriptsloaded
        // how long does the mute have to last after the last SEND event in seconds
        // needs to be just a little higher than the highest latency between SENDING and RETURNING
        %muteTime = 0.5
        %debug = false

        // ROUTES
        // ensure that the corresponding sends and returns are in the corresponding positions in their lists
        $sendReturnMap new map

        // paths for sending and returning messages between hardware and software
        // SYNTAX:
        // the MAP key is the hardware source device,
        // the MAP value is a LIST (destination software device, return from software device, return to hardware device)
        $sendReturnMap + "Maschine MK3 Ctrl MIDI" : ("fM3", "2M3", "Maschine MK3 Ctrl MIDI")
        $sendReturnMap + "Maschine Jam - 1" : ("fMJ", "2MJ", "Maschine Jam - 1")
        $sendReturnMap + "KOMPLETE KONTROL - 1" : ("fKK", "2KK", "KOMPLETE KONTROL - 1")

        // ===== NO MORE CONFIGURATION CHANGES BELOW THIS LINE ==============
        %routeMap new map
        %mutedPathsMap new map
        foreach $hardwareSourceDevice : $pathDevices in $sendReturnMap
            %routeMap + $hardwareSourceDevice : ($pathDevices 1) // send path from hardware to software
            %routeMap + ($pathDevices 2) : ($pathDevices 3) // return path from software to hardware
            %mutedPathsMap + $hardwareSourceDevice : ($pathDevices 2) // only muting return traffic, manual input from hardware always ok
        %muteMap new map // map key: device channel type Map value: time stamp

    script process_midi_event
        // this function processes outbound MIDI
        // object containing the components of a midi message
        $midiMessage = $midi_message
        $outDevice = ( %routeMap ($midiMessage 1) )
        $muteMapKey = (%mutedPathsMap $outDevice) ($midiMessage 2) ($midiMessage 3) ($midiMessage 4)
        if ((time) > (%muteMap $muteMapKey))
            if (count $outDevice) > 0
                if %debug
                    print "SENDING: " ($midiMessage 2) ($midiMessage 4) ($midiMessage 5) false ($midiMessage 3) $outDevice
                midi ($midiMessage 2) ($midiMessage 4) ($midiMessage 5) false ($midiMessage 3) $outDevice
        else
            if %debug
                print "MUTED: " ($midiMessage 2) ($midiMessage 4) ($midiMessage 5) 0 ($midiMessage 3) $outDevice

    event midi
        // serialize the incoming midi event
        $midiEvent = ($trigger device), ($trigger type), ($trigger channel)
        switch ($trigger type)
            case pitchbend
                $midiEvent + ($trigger pitchbend)
            case channelaftertouch
                $midiEvent + ($trigger aftertouchvalue)
            case programchange
                $midiEvent + ($trigger program)
            case note
                $midiEvent + ($trigger note)
                $midiEvent + ($trigger velocity)
            case noteoff // not implemented in CM
                $midiEvent + ($trigger noteoff)
                $midiEvent + ($trigger velocity)
            case controlchange
                $midiEvent + ($trigger controlnumber)
                $midiEvent + ($trigger controlvalue)
            case noteaftertouch
                $midiEvent + ($trigger note)
                $midiEvent + ($trigger aftertouchvalue)
            case default
                return !$globalMidiThru
        if (%mutedPathsMap ($midiEvent 1))
            $muteMapKey = (%mutedPathsMap ($midiEvent 1)) ($midiEvent 2) ($midiEvent 3) ($midiEvent 4) // device type channel number
            if ((%muteMap $muteMapKey) != ("")) // update existing entry
                %muteMap $muteMapKey = (time) + %muteTime
            else // new entry
                %muteMap + ($muteMapKey : (time) + %muteTime)
        runscript echoMute.cy process_midi_event (midi_message: $midiEvent) false // process the serialized midi event
        return true

You may freely copy and adjust the code to your needs and liking. The changes needed are mostly near the top. You’ll need to insert the device names of your controller hardware and the desired length of the mute (my code currently has it at 0.5 seconds).

I will take this page down if Steinberg ever provides a fix within Cubase. Which I still hope will happen one day. 🙂

If you spot an error or omission in this post, you can contact me via the contact form.

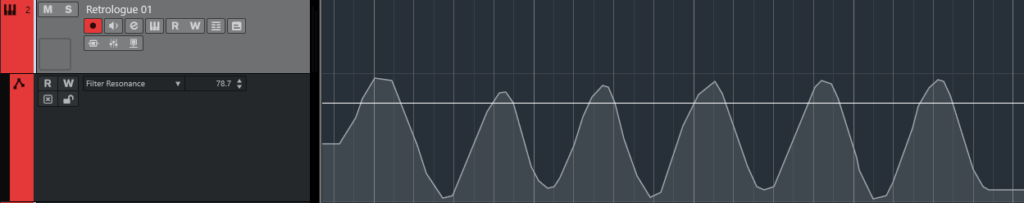

p.s. Oh yeah, when I use my script between my hardware controllers and Cubase, the VST automation looks no longer jagged, but smooth:

p.s. I should also mention MIDI-OX, a classic MIDI utility program for Windows, which I used during the initial investigations of the problem and the development of my script.